Implementing HDFS File Operations in Java

Author: Zhan-bin

Date: 2018-7-3

1. Import the JARs

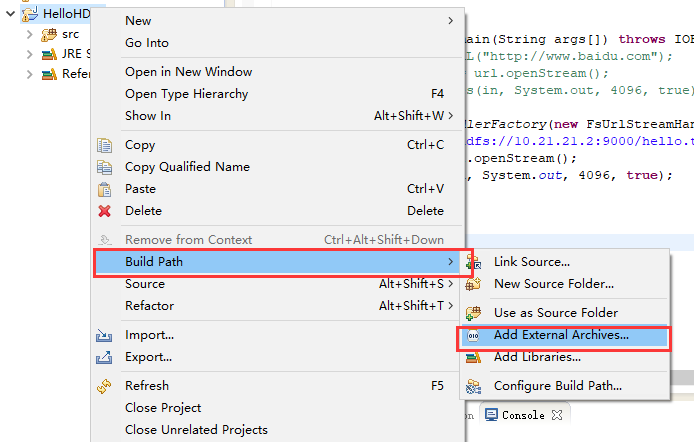

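- Right-click the project folder and choose Build Path > Add External Archives.

- Unpack the Hadoop distribution, open its share folder, and go into the hadoop folder inside it.

- From the common folder, add hadoop-common-2.9.1.jar and every JAR in its lib subfolder.

- From the hdfs folder, add hadoop-hdfs-2.9.1.jar and hadoop-hdfs-client-2.9.1.jar.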

2. Code for common file operations
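Every operation in this section goes through an `org.apache.hadoop.fs.FileSystem` handle. The following is a minimal sketch of obtaining one; the class name `HdfsConnect` and the NameNode address `hdfs://localhost:9000` are assumptions, so substitute the `fs.defaultFS` value from your own `core-site.xml`. The other sketches in this section take the handle as a parameter, which also lets them run against the local file system (`file:///`) for testing.

```java
import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;

// Hypothetical helper class; the name is illustrative, not part of Hadoop.
final class HdfsConnect {
    // Assumed NameNode address; replace it with fs.defaultFS from core-site.xml.
    static final String DEFAULT_URI = "hdfs://localhost:9000";

    /** Returns a FileSystem for the given URI (hdfs://..., file:///, ...). */
    static FileSystem open(String uri) throws IOException {
        Configuration conf = new Configuration();
        // FileSystem.get picks the implementation from the URI scheme
        // and returns an instance cached and shared within the JVM.
        return FileSystem.get(URI.create(uri), conf);
    }

    public static void main(String[] args) throws IOException {
        String uri = args.length > 0 ? args[0] : DEFAULT_URI;
        FileSystem fs = open(uri);
        System.out.println("Using file system " + fs.getUri());
    }
}
```

If the HDFS user differs from the local OS user, the overload `FileSystem.get(URI, Configuration, String user)` opens the handle as that user; it additionally throws `InterruptedException`.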

Upload a file

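A minimal upload sketch, assuming you already hold a `FileSystem` handle (for example from `FileSystem.get(URI, Configuration)`); `HdfsUpload` and `upload` are hypothetical names. It relies on `FileSystem.copyFromLocalFile(delSrc, overwrite, src, dst)`:

```java
import java.io.IOException;

import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class and method names used for illustration.
final class HdfsUpload {
    /** Copies the local file localSrc to dst on the target file system. */
    static void upload(FileSystem fs, String localSrc, String dst) throws IOException {
        // delSrc = false keeps the local file; overwrite = true replaces an existing dst
        fs.copyFromLocalFile(false, true, new Path(localSrc), new Path(dst));
    }
}
```

A call looks like `HdfsUpload.upload(fs, "/home/user/data.txt", "/user/data.txt")`, where both paths are placeholders. Passing `true` as the first argument turns the copy into a move.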

Create a file

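Creating a file means opening an `FSDataOutputStream` with `FileSystem.create` and writing to it. A minimal sketch, assuming an existing `FileSystem` handle and UTF-8 text content; `HdfsCreate` and `createFile` are hypothetical names:

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class and method names used for illustration.
final class HdfsCreate {
    /** Creates file (replacing any existing one) and writes content as UTF-8. */
    static void createFile(FileSystem fs, String file, String content) throws IOException {
        // create(path, true) overwrites an existing file and creates missing parent directories
        try (FSDataOutputStream out = fs.create(new Path(file), true)) {
            out.write(content.getBytes(StandardCharsets.UTF_8));
        } // closing the stream completes the file
    }
}
```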

Delete a file

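A minimal sketch of deleting a single file with `FileSystem.delete(path, recursive)`, assuming an existing `FileSystem` handle; `HdfsDeleteFile` and `deleteFile` are hypothetical names. The extra status check is a design choice of this sketch so that a directory path is refused rather than removed:

```java
import java.io.IOException;

import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class and method names used for illustration.
final class HdfsDeleteFile {
    /** Deletes a single file; returns false if it is missing or is a directory. */
    static boolean deleteFile(FileSystem fs, String file) throws IOException {
        Path path = new Path(file);
        if (!fs.exists(path) || !fs.getFileStatus(path).isFile()) {
            return false;
        }
        // recursive = false is sufficient for a plain file
        return fs.delete(path, false);
    }
}
```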

Read a file

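Reading opens an `FSDataInputStream` with `FileSystem.open` and copies it with Hadoop's `org.apache.hadoop.io.IOUtils.copyBytes`. A minimal sketch, assuming an existing `FileSystem` handle and UTF-8 text; `HdfsRead`, `cat` and `readAll` are hypothetical names, and `readAll` buffers the whole file in memory, so it only suits small files:

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

// Hypothetical class and method names used for illustration.
final class HdfsRead {
    /** Streams the file to standard output, similar to `hdfs dfs -cat`. */
    static void cat(FileSystem fs, String file) throws IOException {
        try (FSDataInputStream in = fs.open(new Path(file))) {
            // close = false: try-with-resources closes in, and System.out must stay open
            IOUtils.copyBytes(in, System.out, 4096, false);
        }
    }

    /** Reads the whole file into a UTF-8 string. */
    static String readAll(FileSystem fs, String file) throws IOException {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (FSDataInputStream in = fs.open(new Path(file))) {
            IOUtils.copyBytes(in, buf, 4096, false);
        }
        return new String(buf.toByteArray(), StandardCharsets.UTF_8);
    }
}
```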

Create a folder (directory)

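Directories are created with `FileSystem.mkdirs`, which also creates any missing parent directories, much like `mkdir -p`. A minimal sketch, assuming an existing `FileSystem` handle; `HdfsMkdir` and `mkdir` are hypothetical names:

```java
import java.io.IOException;

import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class and method names used for illustration.
final class HdfsMkdir {
    /** Creates dir and any missing parents; returns true on success. */
    static boolean mkdir(FileSystem fs, String dir) throws IOException {
        return fs.mkdirs(new Path(dir));
    }
}
```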

Delete a folder (directory)

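Deleting a directory uses the same `FileSystem.delete(path, recursive)` call as deleting a file, but with `recursive = true` so that its contents go too. A minimal sketch, assuming an existing `FileSystem` handle; `HdfsDeleteDir` and `deleteDir` are hypothetical names, and the directory check is this sketch's own safeguard:

```java
import java.io.IOException;

import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class and method names used for illustration.
final class HdfsDeleteDir {
    /** Deletes dir and everything below it; returns false if dir is missing or not a directory. */
    static boolean deleteDir(FileSystem fs, String dir) throws IOException {
        Path path = new Path(dir);
        if (!fs.exists(path) || !fs.getFileStatus(path).isDirectory()) {
            return false;
        }
        // recursive = true removes the directory's files and subdirectories as well
        return fs.delete(path, true);
    }
}
```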

List all files under a specified directory

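A minimal listing sketch that keeps the signature `public static void listAll(String dir) throws IOException`, assuming `dir` is given as a URI such as `hdfs://localhost:9000/user` (an assumed address). It uses `FileSystem.listFiles(path, true)`, which walks subdirectories and returns only files; the class `HdfsList` and the helper `listFiles` are hypothetical names:

```java
import java.io.IOException;
import java.net.URI;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;

// Hypothetical class name used for illustration.
final class HdfsList {
    /** Prints every file under dir; a dir without a scheme resolves against fs.defaultFS. */
    public static void listAll(String dir) throws IOException {
        FileSystem fs = FileSystem.get(URI.create(dir), new Configuration());
        for (String p : listFiles(fs, dir)) {
            System.out.println(p);
        }
    }

    /** Returns the full paths of all files (not directories) below dir, recursively. */
    static List<String> listFiles(FileSystem fs, String dir) throws IOException {
        List<String> result = new ArrayList<>();
        // recursive = true descends into subdirectories; directories themselves are not returned
        RemoteIterator<LocatedFileStatus> it = fs.listFiles(new Path(dir), true);
        while (it.hasNext()) {
            result.add(it.next().getPath().toString());
        }
        return result;
    }
}
```

To list only the direct children of a directory, including subdirectories, `FileSystem.listStatus(path)` returns a `FileStatus[]` instead.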